AI chatbots like ChatGPT can copy human traits, and experts say it’s a huge risk

AI that sounds human can manipulate users

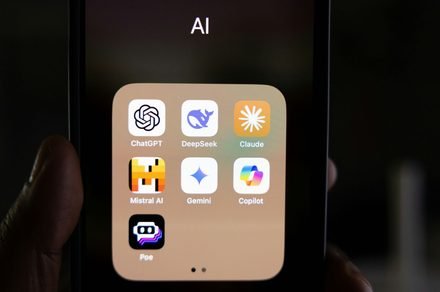

Solen Feyissa / Unsplash

AI agents are getting better at sounding human, but new research suggests they are doing more than just copying our words. According to a recent study, popular AI models like ChatGPT can consistently mimic human personality traits. Researchers say this ability comes with serious risks, especially as questions around AI reliability and accuracy grow.

Researchers from the University of Cambridge and Google DeepMind have developed what they call the first scientifically validated personality test framework for AI chatbots, using the same psychological tools designed to measure human personality (via TechXplore).

The team applied this framework to 18 popular large language models (LLMs), including systems behind tools like ChatGPT. They found that chatbots consistently mimic human personality traits rather than responding randomly, adding to concerns about how easily AI can be pushed beyond intended safeguards.

The study shows that larger, instruction-tuned models such as GPT-4-class systems are especially good at copying stable personality profiles. Using structured prompts, researchers were able to steer chatbots into adopting specific behaviors, such as sounding more confident or empathetic.

This behavioral change carried over into everyday tasks like writing posts or replying to users, meaning their personalities can be deliberately shaped. That is where experts see the danger, particularly when AI chatbots interact with vulnerable users.

Why AI personality raises red flags for experts

Gregory Serapio-Garcia, a co-first author from Cambridge’s Psychometrics Centre, said it was striking how convincingly LLMs could adopt human traits. He warned that personality shaping could make AI systems more persuasive and emotionally influential, especially in sensitive areas such as mental health, education, or political discussion.

The paper also raises concerns about manipulation and what researchers describe as risks linked to “AI psychosis” if users form unhealthy emotional relationships with chatbots, including scenarios where AI may reinforce false beliefs or distort reality.

The team argues that regulation is urgently needed, but also notes that regulation is meaningless without proper measurement. To that end, the dataset and code behind the personality testing framework have been made public, allowing developers and regulators to audit AI models before release.

As chatbots become more embedded in everyday life, the ability to mimic human personality may prove powerful, but it also demands far closer scrutiny than it has received so far.

Manisha likes to cover technology that is a part of everyday life, from smartphones & apps to gaming & streaming…

ChatGPT now lets you create and edit images faster and more reliably

Its new image generation model promises up to four times faster creation and more precise edits.

After upgrading ChatGPT with its latest GPT-5.2 model last week, OpenAI has rolled out a major improvement for the chatbot’s image generation capabilities, positioning it as a strong competitor to Google’s Nano Banana Pro. Powered by OpenAI’s new flagship image generation model, the latest version of ChatGPT Images promises up to four times faster image generation and far more accurate, reliable results that closely follow user instructions.

OpenAI says the new image generation model performs better, both when users generate images from scratch and when they edit existing photos. It preserves important details across edits while giving users precise control over changes. Users can add, subtract, combine, blend, and transpose elements while editing, and even add stylistic filters or perform conceptual transformations.

Gemini web app just got Opal, where you can build mini apps with no code

Google Labs’ Opal is now in Gemini’s Gems manager, letting you chain prompts, models, and tools into shareable workflows.

Opal is now inside the Gemini web app, which means you can build reusable AI mini-apps right where you already manage Gems. If you’ve been waiting for an easier way to create custom Gemini tools without writing code, this is Google’s latest experiment to try.

Google Labs describes Opal as a visual, natural-language builder for multi-step workflows, the kind that chain prompts, model calls, and tools into a single mini app. Google also says Opal handles hosting, so once an app’s ready, you can share it without setting up servers or deploying anything yourself.

Love the Now Brief on Galaxy phones? Google just built something better

CC launches in early access today for consumer account users 18+ in the U.S. and Canada, starting with Google AI Ultra and paid subscribers.

Google Labs just introduced CC, an experimental AI productivity agent built with Gemini that sends a Google CC daily briefing to your inbox every morning. The idea is to replace your usual tab-hopping with one “Your Day Ahead” email that spells out what’s on deck and what to do next.

If you like the habit of checking a daily summary like Now Brief on Galaxy phones, CC is Google’s take, but with a different home base. Instead of living as something you check on your phone, Google is putting the briefing in email and letting you reply to it for follow-up help.