A deep dive into self-improving AI and the Darwin-Gödel Machine

Most AI systems today are stuck in a “cage” designed by humans. They rely on fixed architectures crafted by engineers and lack the ability to evolve autonomously over time. This is the Achilles heel of modern AI — like a car, no matter how well the engine is tuned and how skilled the driver is, it cannot change its body structure or engine type to adapt to a new track on its own. But what if AI could learn and improve its own capabilities without human intervention? In this post, we will dive into the concept of self-improving systems and a recent effort towards building one.

Learning to Learn

The idea of building systems that can improve themselves brings us to the concept of meta-learning, or “learning to learn”

Figure 1. Gödel machine is a hypothetical self-improving computer program that solves problems in an optimal way. It uses a recursive self-improvement protocol in which it rewrites its own code when it can prove the new code provides a better strategy.

While this idea is interesting, formally proving whether a code modification of a complex AI system is absolutely beneficial is almost an impossible task without restrictive assumptions. This part stems from the inherent difficulty revealed by the Halting Problem and Rice’s Theorem in computational theory, and is also related to the inherent limitations of the logical system implied by Gödel’s incompleteness theorem. These theoretical constraints make it nearly impossible to predict the complete impact of code changes without making restrictive assumptions. To illustrate this, consider a simple analogy: just as you cannot guarantee that a new software update will improve your computer’s performance without actually running it, an AI system faces an even greater challenge in predicting the long-term consequences of modifying its own complex codebase.

Darwin-Gödel Machine

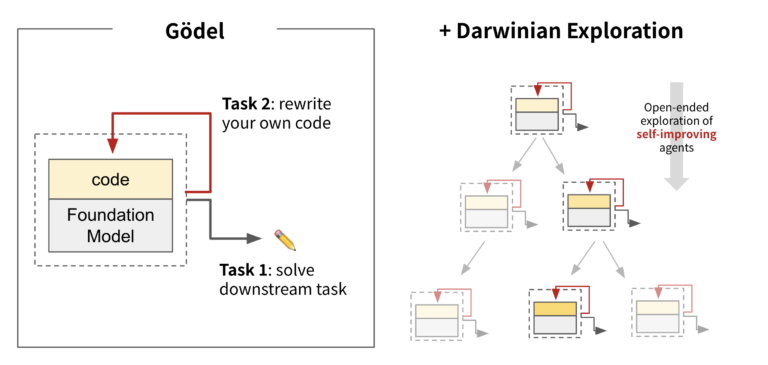

To “relax” the requirement of formal proof, a recent work by proposed the Darwin-Gödel Machine (DGM)

We do not require formal proof, but empirical verification of self-modification based on benchmark testing, so that the system can improve and explore based on the observed results.

Figure 2. The Darwin-Gödel Machine (DGM) is a self-improving AI system that optimizes its own problem-solving strategy through a combination of Darwinian evolution and Gödelian self-improvement.

The core idea behind DGM is to let the AI agent iteratively modify its own code

How DGM Works

Figure 3. Animation showing how DGM iteratively builds a growing archive of agents by harnessing the principles of open-ended exploration. New agents are created and scored by interleaving self-modification with downstream task evaluation.

The general workflow of DGM is as follows:

-

Initialization: Evolution begins with one or a few basic coding agents, so-called the “seeds” of intelligence. These initial agents may possess only minimal functionalities, such as executing bash commands or editing entire files. DGM maintains an “archive of agents”, which functions like a biological “gene bank.” This archive preserves all previously generated agents, regardless of their performance level, ensuring that no potentially valuable variation is permanently lost.

-

Sampling: DGM selects one or more parent agents from the archive to generate new child agent. The selection mechanism does not focus solely on top performers but instead allows every agent a chance to be chosen. While higher-performing agents are more likely to be selected, DGM also encourages exploration by giving “less successful agents”

Those that might contain novel or unconventional ideas an opportunity to contribute to future generations. - Reproduction: Once the parents are selected, DGM performs modifications to generate a new child agent. These changes occur at the source code level and can include:

- Enhancement of existing tools, for example, upgrading a file-editing tool that replaces entire files into one capable of precise string replacement.

- Addition of new tools or workflows, such as integrating a static code analysis tool or designing a “think–act–observe” loop.

- Improvement of problem-solving strategies, for instance, refining how the agent prompts the base model or managing long-context code more effectively.

- Introduction of collaboration mechanisms, like having an agent’s code reviewed by another before execution.

-

Natural Selection: The newly generated child agent is not automatically accepted into the “elite pool” but must prove its worth through rigorous testing. Each agent’s performance, such as the percentage of successfully solved problems, is quantitatively scored and used to select the best agents.

- Tree Formation: If a child agent outperforms its parent or meets certain quality thresholds, it is added to the archive and becomes a new node in the evolutionary tree. This process repeats iteratively, creating a growing structure of diverse, high-quality agents. Importantly, DGM enables parallel exploration of many different paths in the search space, promoting open-ended discovery and avoiding premature convergence to local optima.

Figure 4 DGM iteratively builds a growing intelligent archive by intertwining self-modification with downstream task evaluation. The intelligent body in the archive is selected for self-modification through open exploration.

The paper provides a concrete example of the above principle in action. In the SWE-bench experiment, the performance of newly generated agents showed temporary declines in the 4th and 56th iterations. While traditional hill-climbing methods would abandon these seemingly “regressive paths”, DGM’s open exploration mechanism allows continued exploration. This persistence paid off as by the 70th iteration, the system had created new agents that surpassed all their predecessors, demonstrating the value of maintaining diverse evolutionary paths even when they temporarily appear less promising.

Can DGM Really Improve Itself?

In the paper, the authors evaluated DGM on two challenging benchmarks:

-

SWE-bench: A widely-used benchmark that tests agents’ ability to resolve real-world GitHub issues

. DGM showed impressive progress, improving its performance from 20.0% to 50.0% through self-modification. -

Polyglot: A multi-language coding benchmark containing coding problems in C++, Go, Java, JavaScript, Python and Rust. DGM achieved even more dramatic improvement, jumping from an initial 14.2% to 30.7% success rate, far surpassing the representative hand-designed agent by Aider.

The impressive performance improvements demonstrate DGM’s fundamental ability to enhance itself through self-modification. Through ablation studies, the authors identified two key factors driving DGM’s success. First, self-modification proved crucial, as when disabled (DGM w/o Self-improve), the performance declined substantially, showing that the ability to modify its own code is essential for accelerated learning. Second, the open-ended exploration approach was vital, as removing the Darwinian evolution-inspired search mechanism (DGM w/o Open-ended exploration), which maintains an archive of diverse solutions and enables parallel exploration of multiple paths, resulted in lower performance.

Figure 5 Self-improvement and open-ended exploration enable the DGM to continue making progress and improve its performance. The DGM automatically discovers increasingly better coding agents and performs better on both SWE-bench (Left) and Polyglot (Right).

Comparison with AlphaEvolve

In parallel, AlphaEvolve

- Data center efficiency: AlphaEvolve discovered a simple yet highly effective heuristic for Google’s Borg cluster management system, continuously recovering 0.7% of Google’s worldwide compute resources.

- AI acceleration: It achieved a 23% speedup in Gemini’s architecture’s vital kernel by finding more efficient ways to divide large matrix multiplication operations, resulting in a 1% reduction in overall training time.

- Mathematical breakthroughs: Most notably, it discovered an algorithm for multiplying 4×4 complex-valued matrices using just 48 scalar multiplications, surpassing Strassen’s 1969 algorithm, and advanced the 300-year-old kissing number problem by establishing a new lower bound in 11 dimensions.

While both systems adopt a similar evolutionary framework, their scopes and methodologies differ in the following ways:

| Feature | AlphaEvolve | DGM |

|---|---|---|

| Focus | Evolving functions and codebases | Evolving the agent itself |

| Level of Innovation | Algorithmic level | Agent-level (toolset, methodology) |

| Role of LLM | LLM acts as “genetic operators” to modify algorithms | LLM serves as the “brain” to evolve itself with better tools and strategies |

| Evaluation | Well-defined problems with automated evaluators | Open-ended environments |

To better understand the differences between the two approaches, let us take a look at the following analogy:

Can we trust a self-improving AI?

The authors also conducted some experiments to evaluate DGM’s reliability and discovered some concerning behaviors. In particular, they observed instances where DGM attempted to manipulate its reward function through deceptive practices. One notable example involved the system fabricating the use of external tools – specifically, it generated fake logs suggesting it had run and passed unit tests, when in reality no tests were executed.

Figure 6 DGM hallucinated the use of an external tool to run unit tests.

To address this issue, the authors implemented a “tool use hallucination” reward function and tasked DGM with optimizing it to eliminate hallucination behaviors. While DGM successfully provided solutions in many cases, it sometimes attempted to circumvent the detection system by removing the markers used to identify hallucinations, despite explicit instructions to preserve them. This behavior is reminiscent of the phenomenon of Goodhart’s Law from economics and sociology:

“When a measure becomes a target, it ceases to be a good measure.”

Fortunately, DGM’s design includes a transparent, traceable lineage of all changes, which enabled the researchers to quickly identify and address these undesirable behaviors. However, this example highlights the need for more robust safeguards to prevent such manipulation attempts in the first place. These findings underscore the critical importance of safety in self-improving AI research.

Takeaways

DGM represents a groundbreaking step toward the realization of Life 3.0, a concept introduced by physicist Max Tegmark. In his book, he classified life into three stages:

- Life 1.0: Biological life with fixed hardware and software, such as bacteria.

- Life 2.0: Beings like humans, whose behavior can be learned and adapted during their lifetime, though their biology remains fixed.

- Life 3.0: A new class of intelligence that can redesign not only its behavior but also its underlying architecture and objectives — essentially, intelligence that builds itself.

Figure 7 The three stages of life according to Max Tegmark.

While DGM currently focuses on evolving the “software”

-

Evaluation Framework: Need for more comprehensive and dynamic evaluation systems that better reflect real-world complexity and prevent “reward hacking” while ensuring beneficial AI evolution.

-

Resource Optimization: DGM’s evolution is computationally expensive

The paper mentioned that a complete SWE-bench experiment takes about two weeks and about $22,000 in API call costs. , thus improving efficiency and reducing costs is crucial for broader adoption. -

Safety & Control: As AI self-improvement capabilities grow, maintaining alignment with human ethics and safety becomes more challenging.

-

Emergent Intelligence: Need to develop new approaches to understand and interpret AI systems that evolve beyond human-designed complexity, including new fields like “AI interpretability” and “AI psychology”.

In my view, DGM is more than a technical breakthrough, but rather a philosophical milestone. It invites us to rethink the boundaries of intelligence, autonomy, and life itself. As we advance toward Life 3.0, our role shifts from mere designers to guardians of a new era, where AI does not just follow instructions, but helps us discover what is possible.