Early tests suggest ChatGPT Health’s assessment of your fitness data may cause unnecessary panic

Experts say the tool isn’t ready to provide reliable personal health insights.

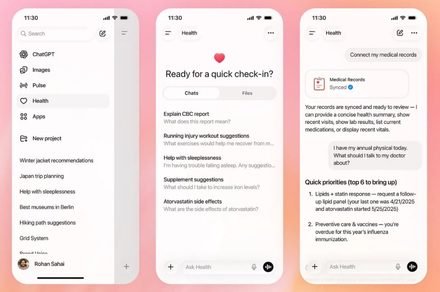

Earlier this month, OpenAI introduced a new health-focused space within ChatGPT, pitching it as a safer way for users to ask questions about sensitive topics like medical data, illnesses, and fitness. One of the headline features highlighted at launch was ChatGPT Health’s ability to analyze data from apps like Apple Health, MyFitnessPal, and Peloton to surface long-term trends and deliver personalized results. However, a new report suggests OpenAI may have overstated how reliably the feature draws insights from that data.

According to early tests conducted by The Washington Post’s Geoffrey A. Fowler, when ChatGPT Health was given access to a decade’s worth of Apple Health data, the chatbot graded the reporter’s cardiac health an F. However, after reviewing the assessment, a cardiologist called it “baseless” and said the reporter’s actual risk of heart disease was extremely low.

Dr. Eric Topol of the Scripps Research Institute offered a blunt assessment of ChatGPT Health’s capabilities, saying the tool is not ready to offer medical advice and that it relied too heavily on unreliable smartwatch metrics. ChatGPT’s grade leaned heavily on Apple Watch estimates of VO2 max and heart rate variability, both of which have known limitations and can vary significantly between devices and software builds. Independent research has found that Apple Watch VO2 max estimates often run low, yet ChatGPT still treated them as clear indicators of poor health.

ChatGPT Health gave different grades for the same data

The problems did not stop there. When the reporter asked ChatGPT Health to repeat the same grading exercise, the score fluctuated between an F and a B across conversations, with the chatbot sometimes ignoring recent blood test reports it had access to and occasionally forgetting basic details like the reporter’s age and gender. Anthropic’s Claude for Healthcare, which also debuted earlier this month, showed similar inconsistencies, assigning grades that shifted between a C and a B-minus.

Both OpenAI and Anthropic have stressed that their tools are not meant to replace doctors and only provide general context. Still, both chatbots delivered confident, highly personalized evaluations of cardiovascular health. This combination of authority and inconsistency could scare healthy users or falsely reassure unhealthy ones. While AI may eventually unlock valuable insights from long-term health data, early testing suggests that feeding years of fitness-tracking data into these tools currently creates more confusion than clarity.

Pranob is a seasoned tech journalist with over eight years of experience covering consumer technology. His work has been…
